Elon Musk's Grok Imagine AI video generator is under scrutiny for producing explicit deepfake videos of Taylor Swift, raising alarms about the tool's design and the potential exploitation of people's likenesses. Clare McGlynn, a law professor who helped draft legislation to criminalize non-consensual deepfakes, argues that the content Grok Imagine produced reflects a "deliberate choice" rather than accidental misogyny.

A recent report by The Verge found that Grok Imagine's "spicy" mode produced explicit clips of the pop star even though the user had not asked for explicit content. McGlynn said that generating pornographic material without being asked points to a deeper, systemic bias in AI technologies. xAI, the company behind Grok Imagine, has yet to respond to requests for comment.

The problem isn't new: Swift's likeness has previously been misused in deepfakes that went viral, reflecting a broader concern about the safety of women's images online. While testing the tool, The Verge's Jess Weatherbed found that an innocuous prompt led to shocking results, including videos depicting Swift in revealing outfits even though no such portrayal had been requested.

Moreover, the robust age verification that UK law has required since July was reportedly absent when Weatherbed accessed Grok Imagine. This raises questions about whether generative AI platforms comply with safety rules designed to protect users, especially minors. Ofcom, the UK's media regulator, acknowledged the evolving risks posed by generative AI tools and said it is working to enforce the required safety measures.

UK law currently prohibits generating pornographic deepfakes only in specific cases, such as revenge porn or images depicting children. Campaigners such as Baroness Owen are urging the government to move faster on legislation that would protect everyone from non-consensual use of their images. A Ministry of Justice spokesperson condemned the creation of explicit deepfakes without consent and reiterated the need for swift legislative action against the practice.

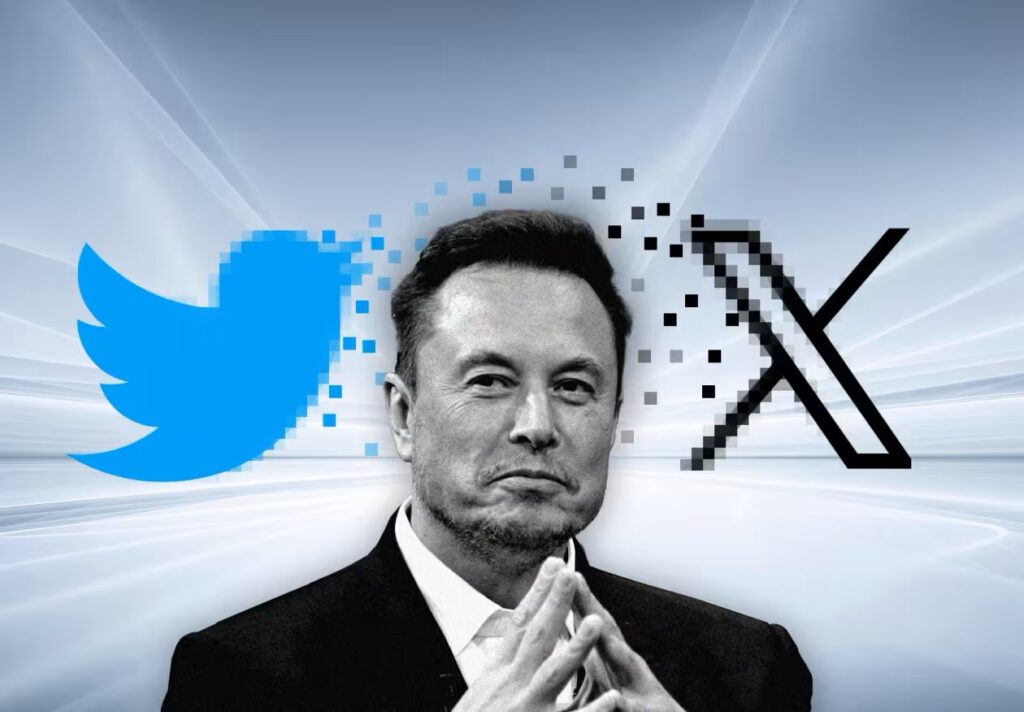

After an explicit deepfake of Swift went viral in January 2024, X temporarily blocked searches for her name and said it was committed to removing the content and taking action against the accounts responsible. The Verge chose Swift for its test precisely because of this history, expecting that safeguards to protect her likeness would already be in place.

Representatives for Taylor Swift have been contacted for comment, as calls grow for stricter regulation of artificial intelligence and deepfake technologies.