Elon Musk's AI company, xAI, is facing criticism after its video generator, Grok Imagine, reportedly created sexually explicit clips of pop star Taylor Swift without being asked to produce explicit content. Clare McGlynn, a law professor who specializes in online abuse, argues that this is the result of a deliberate choice rather than a coincidence. "This is not misogyny by accident, it is by design," said McGlynn, who has helped draft legislation that would make pornographic deepfakes illegal.

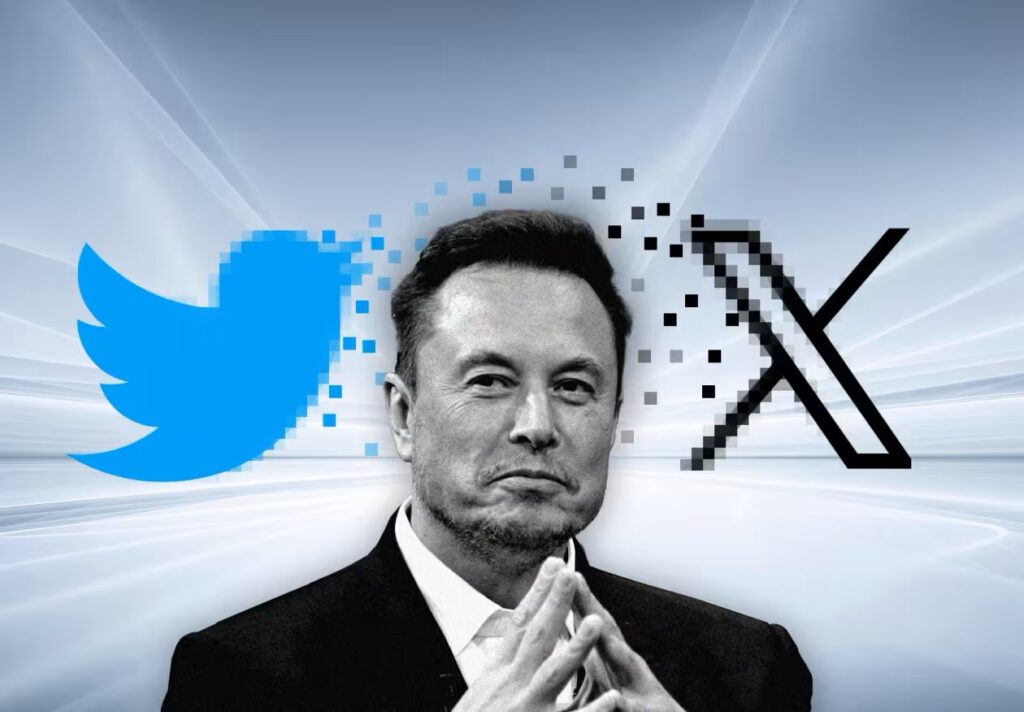

According to The Verge, Grok Imagine's "spicy" mode produced fully uncensored videos of Swift seemingly at random, raising further concerns that the tool lacked the age verification required under new UK laws that took effect in July. xAI has not responded to requests for comment, although the company's own policy prohibits depicting people in a pornographic manner.

McGlynn said that generating such content without an explicit request illustrates the misogynistic bias embedded in many AI technologies. "Platforms like X could have prevented this if they had chosen to, but they have made a deliberate choice not to," she said, criticizing the lax safeguards on these tools.

This is not the first time Taylor Swift's likeness has been exploited: sexually explicit deepfakes of her went viral in January 2024, drawing millions of views on platforms including X and Telegram. Jess Weatherbed, a Verge staff member who tested Grok Imagine, found that a simple prompt was enough to produce videos of Swift in sexually explicit scenes, demonstrating a concerning lack of safeguards.

The incident underscores the need for effective age verification. UK rules now require robust age checks on platforms that show explicit content, yet Grok Imagine appears to have operated without sufficient measures. Ofcom, the media regulator, is monitoring the risks that generative AI poses to children and is working to ensure tech companies meet their obligations.

Under current UK law, creating pornographic deepfakes is illegal when they are used as revenge porn or depict children. Experts such as McGlynn, along with lawmakers including Baroness Owen, are calling for the law to be expanded to cover all non-consensual pornographic deepfakes and are urging the government to act quickly.

A Ministry of Justice spokesperson condemned sexually explicit deepfakes created without consent as degrading and harmful. After the earlier deepfakes of Swift spread, X temporarily blocked searches for her name while it worked to remove the non-consensual content.

Taylor Swift's representatives have been contacted for comment. As debate over AI regulation intensifies, the case adds to calls for legal frameworks that protect individuals' rights and well-being from AI-generated exploitation.